Reliable UDP (RUDP): The Next Big Streaming Protocol?

All too often we shy away from the depths of IP protocols, leaving the application vendors such as Microsoft; Wowza Media Systems, LLC; RealNetworks, Inc.; Adobe Systems, Inc.; and others with more specific skills to deal with the dark art of the network layer for us, while we just type in the server name, hit connect, then hit start.

Those who have had a little experience will probably have heard of TCP (transmission control protocol) and UDP (user datagram protocol). They are transport protocols that run over IP links, and they define two different ways to send data from one point to another over an IP network path. TCP running over IP is written TCP/IP; UDP in the same format is UDP/IP.

TCP has a set of instructions that ensures that each packet of data gets to its recipient. It is comparable to recorded delivery in its most basic form. However, while it seems obvious at first that "making sure the message gets there" is paramount when sending something to someone else, there are a few extra considerations that must be noted. Put simply (modern TCP stacks soften this with extensions such as selective acknowledgment), if TCP notices that a packet has arrived out of sequence, it stops the transmission, discards anything from the out-of-sequence packet forward, sends a "go back to where it went wrong" message, and starts the transmission again from that point.

If you have all the time in the world, this is fine. So for transferring my salary information from my company to me, I frankly don't care if this takes a microsecond or an hour, I want it done right. TCP is fantastic for that.

In a video-centric service model, however, there is simply so much data that if a few packets don't make it over the link, there are situations where I would rather skip those packets and carry on with the overall flow of the video than get every detail of the original source. Our brain can imagine the skipped bits of the video for us as long as it's not distracted by jerky audio and stop-motion video. In these circumstances, having an option to just send as much data from one end of the link to the other in a timely fashion, even if not all of it gets through intact, is clearly desirable. It is for this type of application that UDP is optimal. If a packet seems not to have arrived, then the receiving application waits a few moments to see if it does arrive -- potentially right up to the moment when the viewer needs to see that block of video -- and if playback reaches the point in the buffer where the missing packet should be, the application simply skips the missing data and carries on with the next packet, maintaining the time base of the video. You may see a flicker or some artifacting, but the moment passes almost instantly and more than likely your brain will fill the gap.
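The receiver-side behavior just described is essentially a deadline-driven playout (jitter) buffer. The sketch below is a hypothetical, heavily simplified model, not any particular player's code: it assumes each packet carries a sequence number and that packet `n` is due for playback at `start_time + n * frame_interval`. The class and method names are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PlayoutBuffer:
    """Hypothetical deadline-driven playout buffer for sequence-numbered packets."""

    start_time: float       # playback-clock time at which packet 0 is due
    frame_interval: float   # seconds of media carried by each packet
    pending: Dict[int, bytes] = field(default_factory=dict)  # seq -> payload
    next_seq: int = 0       # next sequence number due for playback

    def receive(self, seq: int, payload: bytes) -> None:
        # A packet arriving after its playback slot has passed is useless: drop it.
        if seq >= self.next_seq:
            self.pending[seq] = payload

    def play_until(self, now: float) -> List[Optional[bytes]]:
        """Release every packet whose deadline is <= now; None marks a skipped gap."""
        released = []
        while self.start_time + self.next_seq * self.frame_interval <= now:
            # Missing packet -> None: the player conceals it and keeps the time base.
            released.append(self.pending.pop(self.next_seq, None))
            self.next_seq += 1
        return released


buf = PlayoutBuffer(start_time=0.0, frame_interval=0.04)
for seq in (0, 1, 3):  # packet 2 is lost in transit
    buf.receive(seq, f"frame{seq}".encode())
released = buf.play_until(0.125)  # deadlines of packets 0-3 have all passed
print(released)  # [b'frame0', b'frame1', None, b'frame3']
```

The gap is reported as `None` rather than stalling playback; if packet 2 turns up later, `receive` discards it because its slot is already gone.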

If this error happens under TCP then it can take TCP upward of 3 seconds to renegotiate for the sequence to restart from the missing point, discarding all the subsequent data, which must be requeued to be sent again. Just one lost packet can cause an entire "window" of TCP data to be re-sent. That can be a considerable amount of data, particularly when the link is known as a Long Fat Network link (LFN or eLeFaNt; it's true -- Google it!).
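To put a number on "a considerable amount of data": the data a sender can have in flight on a path is bounded by its bandwidth-delay product (BDP), the link rate multiplied by the round-trip time. If a single loss forced everything still in flight to be resent, as in the go-back behavior described above, the worst case is roughly one BDP. The link figures below are illustrative assumptions, not measurements.

```python
def bandwidth_delay_product_bytes(link_bps: float, rtt_seconds: float) -> float:
    """Bytes that fit 'in the pipe' for a given link rate and round-trip time."""
    return link_bps * rtt_seconds / 8


# Illustrative links (assumed figures): a LAN and a long fat network.
lan = bandwidth_delay_product_bytes(100e6, 0.001)  # 100 Mbit/s, 1 ms RTT
lfn = bandwidth_delay_product_bytes(1e9, 0.100)    # 1 Gbit/s, 100 ms RTT

print(f"LAN BDP: {lan / 1e3:.1f} KB")  # 12.5 KB
print(f"LFN BDP: {lfn / 1e6:.1f} MB")  # 12.5 MB
```

The same single loss that costs kilobytes on a LAN can put megabytes at stake on an LFN, which is why the retransmission strategy matters so much more there.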

All this adds overhead to the network and to the operations of both computers using that link, as the CPU and network card's processing units have to manage all the retransmission and sync between the applications and these components.

For this reason HTTP (which traditionally runs over TCP) generally introduces startup delays and playback latency, as media players need to buffer more than 3 seconds of playback to manage any lost packets.

Indeed, TCP is very sensitive to something called window size, and knowing that very few of you will ever have adjusted the window size of your contribution feeds when setting up your live Flash Streaming encode, I can estimate that all but those same very few have been wasting available capacity in your network links. You may not care. The links you use are good enough to do whatever it is you are trying to do.
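Why window size matters: a TCP sender can have at most one window of unacknowledged data in flight per round trip, so its throughput is capped at roughly window / RTT no matter how fast the link is. The sketch assumes the classic 65,535-byte maximum window available without the TCP window-scale option; the RTT values are illustrative.

```python
UNSCALED_MAX_WINDOW = 65_535  # bytes: largest window without the window-scale option


def max_tcp_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Throughput ceiling when the window, not the link, is the bottleneck."""
    # At most one window of unacknowledged data can be in flight per round trip.
    return window_bytes * 8 / rtt_seconds


for rtt_ms in (10, 50, 100):
    cap = max_tcp_throughput_bps(UNSCALED_MAX_WINDOW, rtt_ms / 1000)
    print(f"RTT {rtt_ms:>3} ms -> at most {cap / 1e6:.1f} Mbit/s")
```

This prints ceilings of about 52.4, 10.5, and 5.2 Mbit/s. On a 100 ms path, an untuned window leaves most of a fast link idle, which is the wasted capacity referred to above.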

In today's disposable culture of "use and discard" rather than "fix and reuse," it's no surprise that most streaming engineers just shrug and assume that getting more bang for their buck out of their internet connection is beyond their control.

For example, did you know that if you set your maximum transmission unit (MTU) -- ultimately your video packet size -- larger than the smallest MTU along the network path, then the network has to break each packet into smaller pieces in a process called fragmentation? Packet fragmentation has a negative impact on network performance for several reasons. First, a router has to perform the fragmentation, which is an expensive operation. Second, all the routers in the path between the router performing the fragmentation and the destination have to carry additional packets with the requisite additional headers.
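To make the cost concrete, the sketch below computes how an oversized IPv4 packet is split for a smaller path MTU. It assumes a 20-byte IPv4 header with no options on every fragment and applies the IPv4 rule that every fragment except the last carries a multiple of 8 payload bytes (fragment offsets are counted in 8-byte units). The function name is illustrative.

```python
from typing import List, Tuple

IPV4_HEADER = 20  # bytes, assuming no IP options


def ipv4_fragments(total_length: int, path_mtu: int) -> List[Tuple[int, int]]:
    """Return (offset_bytes, payload_bytes) for each fragment of an IPv4 packet.

    total_length is the original packet size including its 20-byte header.
    """
    payload = total_length - IPV4_HEADER
    if total_length <= path_mtu:
        return [(0, payload)]  # fits: no fragmentation needed
    # Each non-final fragment carries the largest multiple of 8 bytes that fits.
    per_fragment = (path_mtu - IPV4_HEADER) // 8 * 8
    fragments = []
    offset = 0
    while offset < payload:
        size = min(per_fragment, payload - offset)
        fragments.append((offset, size))
        offset += size
    return fragments


# A 4,000-byte packet crossing a standard 1,500-byte Ethernet MTU.
frags = ipv4_fragments(4000, 1500)
extra_header_bytes = (len(frags) - 1) * IPV4_HEADER
print(frags)               # [(0, 1480), (1480, 1480), (2960, 1020)]
print(extra_header_bytes)  # 40
```

One oversized packet becomes three, each needing its own header and its own trip through every downstream router; losing any one fragment loses the whole original packet.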

Also, larger packets increase the amount of data you need to resend if a retransmission occurs.

Alternatively, if you set the MTU too small, then the amount of data you can transfer in any one packet is reduced, which relatively increases the amount of signaling overhead (the data about the sending of the data, equivalent to the addresses and parcel tracking services in real post). If you set the MTU as small as you can for an Ethernet connection, you could find that the overhead nears 50% of all traffic.
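The trade-off can be sketched as the share of each packet left for application data (the "goodput" share) at a given MTU. This counts only IPv4 and transport headers (20 bytes of IP plus 8 for UDP or 20 for TCP without options) and ignores Ethernet framing, preamble, and inter-frame gap, which would make small packets look worse still. 68 bytes is the minimum MTU every IPv4 host must support.

```python
IPV4_HEADER = 20  # bytes, no options
UDP_HEADER = 8
TCP_HEADER = 20   # bytes, no options


def payload_share(mtu: int, transport_header: int) -> float:
    """Fraction of each IP packet available for application data."""
    return (mtu - IPV4_HEADER - transport_header) / mtu


for mtu in (68, 576, 1500):
    udp = payload_share(mtu, UDP_HEADER)
    tcp = payload_share(mtu, TCP_HEADER)
    print(f"MTU {mtu:>4}: UDP {udp:.1%} payload, TCP {tcp:.1%} payload")
```

At the 68-byte minimum, headers take about 41% of a UDP packet and about 59% of a TCP one, bracketing the "nears 50%" figure; at 1,500 bytes the header share drops to roughly 2 to 3%.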

UDP offers some advantages over TCP. But UDP is not a panacea for all video transmissions.

Where you are trying to do large video file transfers, UDP should be a great help, but its lossy nature is rarely acceptable for stages in the workflow that require absolute file integrity. Imagine studios transferring master encodes to LOVEFiLM or Netflix for distribution. If that transfer to the LOVEFiLM or Netflix playout lost packets, then every single subscriber of those services would have to accept that degraded master copy as the best possible copy. In fact, if UDP were used in these back-end workflows, the content would degrade the user's experience in the same way that tape-to-tape and other dubbed and analog replication processes historically did. Digital media would lose that perfect replica quality that has been central to its success.

Getting back to the focus on who may want to reduce their network capacity inefficiencies: Studios, playouts, news desks, broadcast centers, and editing suites all want their video content intact/lossless, but naturally they want to manipulate that data between machines as fast as possible. Having video editors drinking coffee while videos transfer from one place to another is inefficient (even if the coffee is good).

Given they cannot operate in a lossy way, are these production facilities stuck with TCP and all the inherent inefficiencies that come with the reliable transfer? Because TCP ensures all the data gets from point to point, it is called a "reliable" protocol. In UDP's case, that reliability is "left to the user," so UDP in its native form is known as an "unreliable" protocol.

The good news is that there are indeed options out there in the form of a variety of "reliable UDP" protocols, and we'll be looking at those in the rest of this article. One thing worth noting at the outset, though, is that if you want to optimize links in your workflow, you can either do it the little-bit-hard way and pay very little, or you can do it the easy way and pay a considerable amount to have a solution fitted for you.

Reliable UDP transports can offer the ideal situation for enterprise workflows -- one that has the benefit of high-capacity throughput, minimal overhead, and the highest possible "goodput" (a rarely used but useful term that refers to the part of the throughput that you can actually use for your application's data, excluding other overheads such as signaling). In the Internet Engineering Task Force (IETF) world, from which the IP standards arise, for nearly 30 years there has been considerable work in developing reliable data transfer protocols. RFC-908, dating from way back in 1984, is a good example.

Essentially, RDP (reliable data protocol) was proposed as a transport layer protocol; it was positioned in the stack as a peer to UDP and TCP. It was published as an RFC (Request for Comments) but did not mature in its own right to become a standard. Indeed, RDP appears to have been eclipsed in the late 1990s by the Reliable UDP Protocol (RUDP), and both Cisco and Microsoft have released RUDP versions of their own within their stacks for specific tasks. Probably because of the "task-specific" nature of RUDP implementations, though, RUDP hasn't become a formal standard, never progressing beyond "draft" status.

One way to think about how RUDP types of transport work is to use a basic model where all the data is sent in UDP format, and each missing packet is indexed. Once the main body of the transfer is done, the recipient sends the sender the index list, and the sender resends only those packets on the list. Because it avoids retransmitting the windows of already-delivered data that immediately follow a missed packet, this simple model is much more efficient. However, it couldn't work for live data, and even for archives, a protocol must be agreed upon for sending the index and for how the sender responds to that re-request in a structured way (which could result in a lot of random-seek disc access, for example, if it was badly done).
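This basic model is easy to simulate. The toy sketch below is not any particular RUDP implementation: it assumes each transmission is lost independently with a fixed probability, that the receiver's index of missing packets (a NACK list) always reaches the sender intact, and that each round resends exactly the listed packets until nothing is missing. The function name is hypothetical.

```python
import random
from typing import Tuple


def bulk_transfer_with_nack(num_packets: int, loss_rate: float,
                            seed: int = 1) -> Tuple[int, int]:
    """Toy model: blast every packet, then resend only those reported missing.

    Returns (rounds, total_packets_sent). Each transmission is lost
    independently with probability loss_rate; the NACK list is assumed
    to reach the sender without loss.
    """
    if not 0.0 <= loss_rate < 1.0:
        raise ValueError("loss_rate must be in [0, 1)")
    rng = random.Random(seed)           # seeded so runs are repeatable
    received = set()
    to_send = list(range(num_packets))  # round 1: the whole file
    rounds = total_sent = 0
    while to_send:
        rounds += 1
        for seq in to_send:
            total_sent += 1
            if rng.random() >= loss_rate:  # this copy survived the link
                received.add(seq)
        # Receiver indexes what is still missing and sends that list back;
        # the next round resends exactly those packets and nothing else.
        to_send = [seq for seq in to_send if seq not in received]
    return rounds, total_sent


rounds, sent = bulk_transfer_with_nack(10_000, loss_rate=0.02)
print(f"{rounds} rounds, {sent} packets sent to deliver 10,000")
```

Because only missing packets are resent, the total sent stays close to `num_packets / (1 - loss_rate)`, about 10,200 here on average, whereas a go-back scheme would also resend good packets that followed each loss.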

There are many reasons the major vendor implementations are task-specific. For example, one may use UDP to avoid TCP retransmission after errors, but if the entire data set must still be faultlessly delivered to the application, one needs to actually understand the application.

If the application requires control data to be sent, it is important for the application to have all the data required to make that decision at any point. If the RUDP system (for example) only looked for and re-requested all the missing packets every 5 minutes (!) then the logical operations that lacked the data could be held up waiting for that re-request to complete. This could break the key function of the application if the control decision needed to be made in less than 5 minutes.

On the other hand, if the data is a large archive of videos being sent overnight for precaching at CDN edges, then the retransmission requests could perhaps be handled in the morning. The retransmission could be delayed until the entire archive has been sent, following up with just the missing packets over a few iterations until all the data is delivered. So the flow, in this case, has to have some user-determined and application-specific control.

TCP is easy because it works in all cases, but it is less efficient because of that. On the other hand, UDP either needs its applications to be resilient to loss or the application developer needs to write in a system for ensuring that missing/corrupted packets are retransmitted. And such systems are in effect proprietary RUDP protocols.

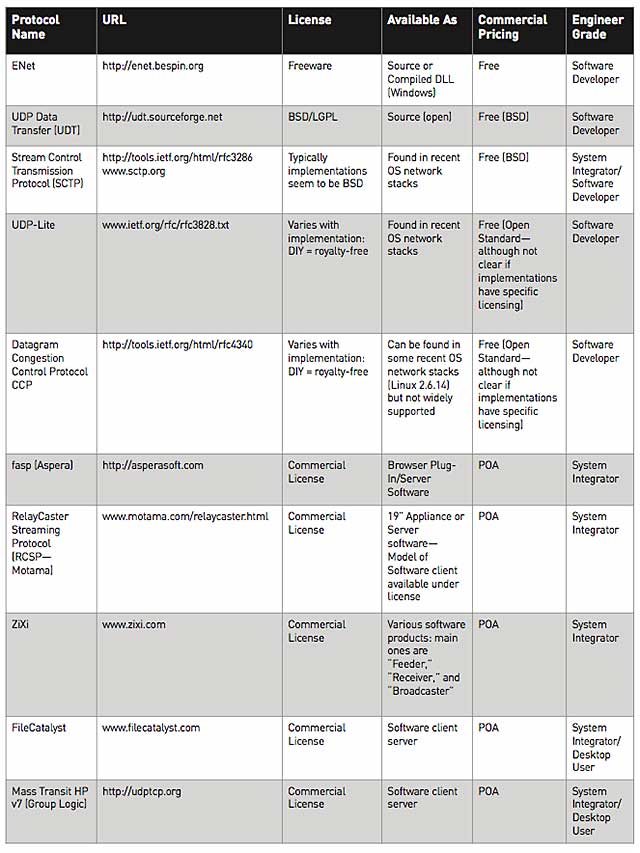

There is an abundance of these, both free/open source and commercial, and I am going to look at several of each (Table 1). Most of you who use existing streaming servers will be tied to the streaming protocols that your chosen vendor offers in its application. However, for those of you developing your own streaming applications, or bespoke aspects of workflows yourselves, this list should be a good starting point for the protocols you could consider. It will also be useful for those of you who are currently using FTP for nonlinear workflows, since the swap-out is likely to be relatively straightforward given that most nonlinear systems do not have the same stage-to-stage interdependence that linear or live streaming infrastructures do.

Let's zip (and I do mean zip) through this list. Note that it is not meant to be a comprehensive selection but purely a sampler.

The first ones to explore, in my mind, are UDP-Lite and the Datagram Congestion Control Protocol (DCCP). These two have essentially become IETF standards, which means that inter-vendor operation is possible (so you won't get locked into a particular vendor).

Table 1: A Selection of Reliable UDP Transports

DCCP

Let's look at DCCP first. Initial code implementations of DCCP are available for those so inclined. From the point of view of a broadcast video engineer, this is really deeply technical stuff for low-level software coders. However, if you happen to be (or simply have access to) engineers of this skill level, then DCCP is freely available. DCCP is a protocol worth considering if you are using shared network infrastructure (as opposed to private or leased line connectivity) and want to ensure you get as much throughput as UDP can enable, while also ensuring that you "play fair" with other users. It is worth commenting that "just turning on UDP" and filling the wire up with UDP data with no consideration of any other user on the wire can saturate the link and effectively make it unusable for others. That is congestion; DCCP, by contrast, fills the pipe as much as possible while still inherently enabling other users to use the wire too.

Some of the key DCCP features include the following:

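- Reliable connection setup and teardown, plus acknowledgments that report which packets arrived (DCCP does not itself retransmit lost data)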

- Discovery of the right MTU size is part of the protocol design (so you fill the pipe while avoiding fragmentation)

- Congestion control

Indeed, to quote the RFC: "DCCP is intended for applications such as streaming media that can benefit from control over the tradeoffs between delay and reliable in-order delivery."
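One concrete way DCCP "plays fair" is its CCID 3 congestion-control profile, which uses TCP-Friendly Rate Control (TFRC). Rather than reacting sharply to each individual loss, a TFRC sender computes an allowed rate from the measured loss event rate and RTT using the TCP throughput equation, so it takes roughly the share a TCP flow would. The sketch below evaluates that equation with commonly used simplifications (b = 1 packet per ACK, retransmission timeout t_RTO = 4 × RTT); the segment size, RTT, and loss rates are illustrative assumptions.

```python
from math import sqrt


def tfrc_rate_bytes_per_sec(s: int, rtt: float, p: float, b: int = 1) -> float:
    """TCP throughput equation used by TFRC.

    s: segment size in bytes; rtt: round-trip time in seconds;
    p: loss event rate, 0 < p <= 1; b: packets acknowledged per ACK.
    """
    if not 0 < p <= 1:
        raise ValueError("loss event rate p must be in (0, 1]")
    t_rto = 4 * rtt  # simplification: retransmission timeout of four RTTs
    denominator = rtt * sqrt(2 * b * p / 3) + t_rto * (
        3 * sqrt(3 * b * p / 8) * p * (1 + 32 * p ** 2)
    )
    return s / denominator


for loss in (0.001, 0.01, 0.05):
    rate = tfrc_rate_bytes_per_sec(1460, 0.1, loss)
    print(f"loss {loss:.1%}: about {rate * 8 / 1e6:.2f} Mbit/s allowed")
```

The allowed rate falls steeply as loss rises, which is how a congestion-controlled flow backs off and leaves room for other users on a shared link, unlike "just turning on UDP."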

UDP-Lite

The next of these protocols is UDP-Lite. Also an IETF standard, this nearly-identical-to-UDP protocol differs in one key way: It has a checksum (a number that is the result of a logical operation performed on the data, which if it differs after a transfer indicates that the data is corrupt) plus a checksum coverage range that defines which part of the datagram the checksum applies to, whereas in vanilla UDP the checksum (optional in IPv4, mandatory in IPv6), when present, always covers the entire datagram.

Let's simplify that a little: What this means is that in UDP-Lite you can define part of the UDP datagram as something that must arrive with "integrity," i.e., a part that must be error-free. But another part of the datagram, for example the much bigger payload of video data itself, can contain errors (remain unchecked against a checksum) since it could be assumed that the application (for example, the H.264 codec) has error handling or tolerance in it.
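To illustrate, the sketch below builds and checks a UDP-Lite datagram over IPv4 in the way RFC 3828 describes: the UDP length field is reinterpreted as a Checksum Coverage value, the Internet checksum (a 16-bit one's-complement sum) covers the pseudo-header plus only the first `coverage` bytes, and a coverage of 0 means the whole datagram. It is a hand-rolled illustration, not an operating-system socket API; the function names, the 4-byte sequence number at the start of the payload, and the documentation-range addresses and ports are assumptions for the example.

```python
import struct

IPPROTO_UDPLITE = 136  # IANA protocol number for UDP-Lite


def ones_complement_sum(data: bytes) -> int:
    """16-bit one's-complement sum used by the Internet checksum."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # fold the carry back in
    return total


def _pseudo_header(src_ip: bytes, dst_ip: bytes, length: int) -> bytes:
    # IPv4 pseudo-header: source, destination, zero, protocol, datagram length.
    return src_ip + dst_ip + struct.pack("!BBH", 0, IPPROTO_UDPLITE, length)


def udplite_datagram(src_ip: bytes, dst_ip: bytes, sport: int, dport: int,
                     payload: bytes, coverage: int) -> bytes:
    """Build a UDP-Lite datagram whose checksum covers only `coverage` bytes."""
    length = 8 + len(payload)
    if coverage != 0 and not 8 <= coverage <= length:
        raise ValueError("coverage must be 0 or between 8 and the datagram length")
    datagram = struct.pack("!HHHH", sport, dport, coverage, 0) + payload
    covered = length if coverage == 0 else coverage
    pseudo = _pseudo_header(src_ip, dst_ip, length)
    checksum = ~ones_complement_sum(pseudo + datagram[:covered]) & 0xFFFF
    checksum = checksum or 0xFFFF  # a computed 0 is sent as all ones
    return datagram[:6] + struct.pack("!H", checksum) + datagram[8:]


def udplite_verify(src_ip: bytes, dst_ip: bytes, datagram: bytes) -> bool:
    """True if the covered bytes are intact; damage beyond coverage is ignored."""
    coverage = struct.unpack("!H", datagram[4:6])[0]
    if coverage != 0 and not 8 <= coverage <= len(datagram):
        return False
    covered = len(datagram) if coverage == 0 else coverage
    pseudo = _pseudo_header(src_ip, dst_ip, len(datagram))
    return ones_complement_sum(pseudo + datagram[:covered]) == 0xFFFF


src, dst = bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7])
payload = struct.pack("!I", 42) + bytes(1000)  # sequence number + stand-in video
pkt = udplite_datagram(src, dst, 5000, 6000, payload, coverage=8 + 4)

video_hit = bytearray(pkt)
video_hit[500] ^= 0xFF  # bit errors inside the uncovered video data
seq_hit = bytearray(pkt)
seq_hit[9] ^= 0xFF      # bit errors inside the covered sequence number

print(udplite_verify(src, dst, pkt))               # True
print(udplite_verify(src, dst, bytes(video_hit)))  # True: damage outside coverage
print(udplite_verify(src, dst, bytes(seq_hit)))    # False: packet is discarded
```

With the coverage set to the 8-byte header plus the 4-byte sequence number, corrupted video bytes still reach the codec, while a corrupted sequence number causes the packet to be rejected.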

This UDP-Lite method is very pragmatic. On a noisy network link, the video data, which makes up the larger part of the payload, may be subject to errors, while the important sequence number occupies only a small part of the data (and so is statistically less prone to errors). If the covered part fails its checksum, the packet is discarded, and the application can then request a resend of that packet. Note that it is up to the application to request the resend; UDP-Lite itself does not retransmit anything, so the software can either prioritize a resend request or simply plan to work around a "discard" of the failed data. It is also worth noting that most underlying link layer protocols, such as Ethernet or similar MAC-based systems, may discard damaged frames of data anyway unless something interfaces with those link layer devices. So to work reliably, UDP-Lite needs to interface with the network drivers to "override" these frame discards. This adds complexity to the deployment strategy and most likely means the solution is no longer "free." However, it's fundamentally possible.

So I wanted to see what was available "ready to use" for free, or close to free at least. I went looking for a compiled, simple-to-use application with a user-friendly GUI, thinking of videographers who shouldn't have to learn all this code and deep packet stuff just to upload a video to the office.

UDPXfer

While it's not really a protocol per se, I found UDPXfer, a really simple application with just a UDP "send" and "listener" mode for file transfer.

I set up the software on my laptop and a machine in Amazon EC2, fiddled with the firewall, and sent a file. I was initially pleased when a 5MB UDP file transfer completed in 2 minutes and 27 seconds, but when I then ran an FTP transfer of the same file over the same link, I was disappointed to find that it took 1 minute and 50 seconds, considerably faster. When I looked deeper, however, I found that the UDPXfer sender had a "packets per second" slider. I nudged the slider to its highest setting, but the transfer still topped out at essentially 100Kbps, far slower than the effective TCP rate. So I wrote to the developer, Richard Stanway, about this ceiling. He sent a new version that allowed me to set a transmission rate of 1,300 packets per second. He commented that this would saturate the IP link from me to the server, and that in a shared network environment a better approach would be to tune the TCP window size and implement some congestion control. His software was actually geared to resiliency over noisy network links that cause problems for TCP.
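A "packets per second" control is essentially a pacing loop. The sketch below is a minimal illustration, not UDPXfer's actual code: it assumes each datagram is prefixed with a 4-byte sequence number and sends one datagram per time slot of 1/pps seconds. The function names are hypothetical. At 1,300 packets per second with an assumed 1,400-byte payload, the send rate works out to about 1,300 × 1,400 × 8 ≈ 14.6 Mbit/s, which is why such a setting can saturate a modest uplink.

```python
import socket
import struct
import time


def paced_send(sock: socket.socket, addr, chunks, packets_per_second: float) -> float:
    """Send each chunk as one UDP datagram, at most packets_per_second per second.

    Each datagram is prefixed with a 4-byte sequence number so the receiver can
    detect gaps. Returns the elapsed sending time in seconds.
    """
    interval = 1.0 / packets_per_second
    start = time.monotonic()
    for seq, chunk in enumerate(chunks):
        # Sleep until this packet's slot; slots are fixed relative to start,
        # so small sleep overshoots do not accumulate into drift.
        delay = start + seq * interval - time.monotonic()
        if delay > 0:
            time.sleep(delay)
        sock.sendto(struct.pack("!I", seq) + chunk, addr)
    return time.monotonic() - start


def rate_mbps(packets_per_second: float, payload_bytes: int) -> float:
    """Application-level send rate implied by a pacing setting."""
    return packets_per_second * payload_bytes * 8 / 1e6


if __name__ == "__main__":
    # Loopback demo: 20 packets at 200 packets/s take about 0.1 s.
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    elapsed = paced_send(sender, receiver.getsockname(), [b"x" * 1400] * 20, 200)
    print(f"sent 20 packets in {elapsed:.3f} s")
    print(f"{rate_mbps(1300, 1400):.2f} Mbit/s at 1,300 pps")
```

Pacing against fixed time slots keeps the average rate accurate even when individual sleeps overshoot; it does nothing, however, to detect or back off from congestion.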

Given that I see this technology being used on private wires, the saturation Stanway warned about mattered less for my enterprise video workflow tests, so I decided to give the new version a try. As expected, I managed to bring the transfer time down to 1 minute and 7 seconds.

So while the software I was using is not on general release, it is clearly possible to implement simple software-only UDP transfer applications that can balance reliability with speed to find a maximum goodput.

Commercial Solutions

But what of the commercial vendors? Do they differentiate significantly enough from "free" to cause me to reach into my pocket?

I caught up with Aspera, Inc. and Motama GmbH, and I also reached out to ZiXi. All of this software is complex to procure at the best of times, so sadly I haven't had a chance to try these products hands-on. Also, the vendors do not publish rate cards, so it's difficult to comment on their pricing and value proposition.

Aspera co-presented at a recent Amazon conference with my company, and we had an opportunity to dig into its technology model a bit. Aspera is indeed essentially providing variations on the RUDP theme. It provides protocols and applications that sit on top of those protocols to enable fast file distribution over controlled network links. In this case, Aspera was selling its offering alongside Amazon Web Services Direct Connect to provide optimal upload speeds. It has a range of similar arrangements in place targeting enterprises that handle high volumes of latency-sensitive data. You can license the software or, through the Amazon model, pay for the service by the hour as a premium AWS service. This is a nice, flexible option for occasional users.

Aspera provides variations on the RUDP theme, including fasp 3, which the company introduced at this year's IBC in Amsterdam.

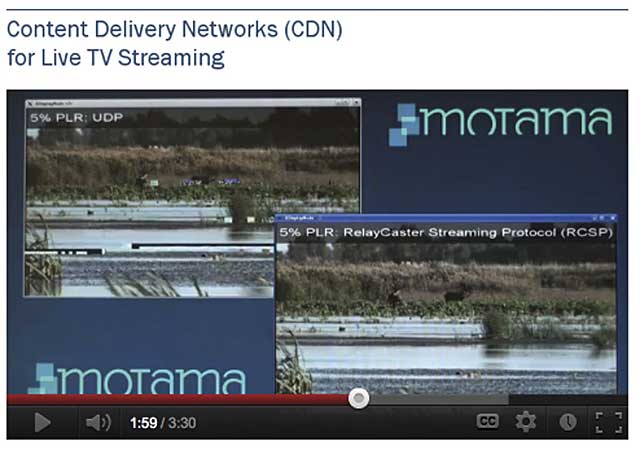

I had a very interesting chat with the CEO of Motama, which has a very appliance-based approach to its products. The RUDP-like protocol (called RelayCaster Streaming Protocol or RCSP) is used internally by the company's appliances to move live video from the TVCaster origination appliances to RelayCaster devices. These can then be set up hierarchically in a traditional hub-and-spoke or potentially other, more complex topologies. The software is available (under license) to run on server platforms of your choice, which is good for data center models. The company has also recently started to look at licensing the protocol to a wider range of client devices, and it prides itself on being available for set-top boxes.

Motama offers an "appliance-based" approach to its RUDP-like protocol, which it calls RelayCaster Streaming Protocol and which is available for set-top boxes and CDN licensing.

The last player in the sector I wanted to note was ZiXi. Although I briefly spoke with ZiXi representatives while writing this, we didn't manage to talk in depth before my deadline, so here is what I know from the company's literature and a few customer comments: ZiXi offers a platform that optimizes video transfer for OTT, internet, and mobile applications. The platform offers a richer range of features than just UDP-optimized streaming: it has P2P negotiation and transmuxing, so you can flip your video from standards such as RTMP out to MPEG-TS, as you can with servers such as Wowza. Internally, within its own ecosystem, the company uses its own hybrid ZiXi protocol, including features such as forward error correction. It combines this with application-layer software in a product called Broadcaster, which looks like a server with several common muxes (RTMP, HLS, etc.) and includes ZiXi. If you have an encoder with ZiXi running, then you can contribute directly to the server using the company's RUDP-type transport.

In addition to UDP-optimized streaming, ZiXi offers P2P negotiation and transmuxing, similar to servers from RealNetworks and Wowza.

Worth the Cost?

I am aware that none of these companies licenses its software trivially. The software packages are their core intellectual property, and defending them is vital to the companies' success. I also realize that some of the problems they purport to address may "go away" when you deploy their technology, but in all honesty, that may be a little like replacing the engine of your car because a spark plug is misfiring.

I am left wondering how the customer should weigh the productivity gains of accelerating his or her workflow with these techniques (free or commercial) against the cost of a private connection plus either the development time needed to implement one of the open/free standards or the cost of buying a supported solution.

The pricing indication I have from a few undisclosed sources is that you should expect to spend a few thousand on the commercial vendor's licensing, and then more for applications, appliances, and support. This can quickly add up to a significant sum.

To justify this increased cost, the productivity gains in your workflow must come at considerable scale, since I personally think that a little TCP window sizing, and perhaps paying for slightly "fatter" internet access, may resolve most problems -- particularly in archive transfer and so on -- and is unlikely to cost thousands.

However, at scale, where those optimizations start to make a significant productivity difference, it clearly makes a lot of sense to engage with a commercially supported provider to see if its offering can help.

At the end of the day, regardless of the fact that with a good developer you can do most things for free, there are important drivers in large businesses that will force an operator to choose to pay for a supported, tested, and robust option. For many of the same reasons, Red Hat Linux was a premium product, despite Linux itself being free.

I urge you to explore this space. To misquote James Brown: "Get on the goodput!"

This article appears in the Winter 2012 issue of Streaming Media European Edition as "Get on the Goodput."
